dropless moe | GitHub

MegaBlocks is a lightweight library for mixture-of-experts (MoE) training. The core of the system is efficient "dropless-MoE" (dMoE, paper) and standard MoE layers.


Online Results; Online Payment; Book an Appointment; Find a Doctor; Online Results; Online Payment; Book an Appointment; Facebook Twitter. MakatiMed 24/7 OnCall: +632 8888 8999. . Makati Medical Center - Makati City, Philippines, Ground Floor Circular, Tower 1, Legazpi Village, Makati City. +632 8888 8999 local 2070; Service Hours.

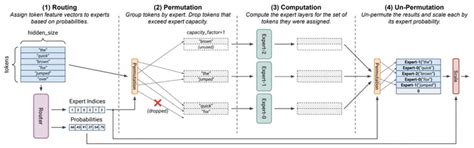

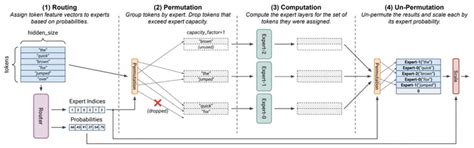

We show how the computation in an MoE layer can be expressed as block-sparse operations to accommodate imbalanced assignment of tokens to experts. We use this …
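To make the block-sparse idea concrete, here is a minimal sketch in plain Python, assuming top-1 routing and a hypothetical block size. The function names `block_sparse_layout` and `nonzero_blocks` are illustrative only and are not part of the MegaBlocks API. Tokens are grouped by expert, each group is rounded up to a whole number of row blocks, and the nonzero blocks form a block-diagonal pattern whose height per expert follows how many tokens that expert actually received.

```python
from collections import Counter

def block_sparse_layout(expert_ids, num_experts, block_size):
    """Group tokens by expert and count row blocks per expert (top-1 routing assumed)."""
    counts = Counter(expert_ids)
    # Stable sort of token indices by expert id, so each expert's tokens are contiguous.
    order = sorted(range(len(expert_ids)), key=lambda t: expert_ids[t])
    # Round each expert's token count up to a whole number of blocks (ceil division).
    row_blocks = [-(-counts.get(e, 0) // block_size) for e in range(num_experts)]
    return order, row_blocks

def nonzero_blocks(row_blocks, ffn_blocks_per_expert):
    """(row_block, col_block) coordinates of the nonzero blocks in the block-diagonal
    topology: expert e's token rows only meet expert e's own FFN columns."""
    blocks, row = [], 0
    for e, n_rows in enumerate(row_blocks):
        cols = range(e * ffn_blocks_per_expert, (e + 1) * ffn_blocks_per_expert)
        for r in range(row, row + n_rows):
            blocks.extend((r, c) for c in cols)
        row += n_rows
    return blocks

# Hypothetical imbalanced routing: expert 2 receives 5 of the 8 tokens.
order, row_blocks = block_sparse_layout([0, 2, 2, 1, 2, 2, 0, 2], num_experts=3, block_size=2)
print(order)       # [0, 6, 3, 1, 2, 4, 5, 7]
print(row_blocks)  # [1, 1, 3]
print(len(nonzero_blocks(row_blocks, ffn_blocks_per_expert=2)))  # 10
```

An expert that receives more tokens simply gets more row blocks instead of overflowing; this sketch only models the layout and does not attempt the GPU kernels that execute the actual block-sparse matrix products.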

MegaBlocks is built on top of Megatron-LM, where we support data, …

In contrast to competing algorithms, MegaBlocks' dropless MoE allows us to scale up Transformer-based LLMs without the need for a capacity factor or load-balancing losses.
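The cost of a capacity factor can be illustrated with a small, hypothetical routing assignment. The sketch below assumes top-1 routing and one common convention, expert capacity = ceil(capacity_factor × tokens / experts), with overflow tokens dropped; exact formulas vary between implementations, and the function names are hypothetical.

```python
import math
from collections import Counter

def dropped_tokens(expert_ids, num_experts, capacity_factor):
    """Count tokens that overflow a fixed per-expert capacity (top-1 routing assumed)."""
    capacity = math.ceil(capacity_factor * len(expert_ids) / num_experts)
    counts = Counter(expert_ids)
    # Every token beyond `capacity` for a given expert is dropped.
    return sum(max(0, c - capacity) for c in counts.values())

def min_capacity_factor(expert_ids, num_experts):
    """Smallest capacity factor at which no token is dropped: sized for the busiest expert."""
    busiest = max(Counter(expert_ids).values())
    return busiest * num_experts / len(expert_ids)

# A hypothetical, deliberately imbalanced assignment: 8 tokens, 3 experts.
assignment = [0, 2, 2, 1, 2, 2, 0, 2]
for cf in (1.0, 1.25, min_capacity_factor(assignment, 3)):
    print(cf, dropped_tokens(assignment, 3, cf))
# prints: "1.0 2", then "1.25 1", then "1.875 0"
```

Here a capacity factor of 1.0 drops 2 tokens and 1.25 drops 1; avoiding drops entirely would require 1.875, which reserves that much capacity on every expert, including the idle ones. A dropless layer sidesteps this trade-off by sizing each expert's work to the tokens it actually receives.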

Finally, also in 2022, “Dropless MoE” by Gale et al. reformulated sparse MoE as a block-sparse matrix multiplication, which allowed scaling up transformer models without the …

Mixture-of-Experts (MoE) models are an emerging class of sparsely activated deep learning models whose compute costs grow sublinearly with their parameter count. In …
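The sublinear-compute claim can be checked with back-of-the-envelope arithmetic. The sketch below assumes each expert is a bias-free two-matrix FFN, a single linear router, and 2 FLOPs per multiply-add; these are illustrative assumptions, not figures from the source, and `moe_ffn_cost` is a hypothetical helper.

```python
def moe_ffn_cost(d_model, d_ff, num_experts, top_k):
    """Rough parameter count and forward FLOPs per token for one MoE FFN layer.

    Assumptions (illustrative): each expert is W_in (d_model x d_ff) plus
    W_out (d_ff x d_model) with no biases, the router is one d_model x num_experts
    projection, and one multiply-add counts as 2 FLOPs.
    """
    expert_params = 2 * d_model * d_ff
    router_params = d_model * num_experts
    total_params = num_experts * expert_params + router_params
    # Each token runs the router plus only its top_k experts, not all of them.
    flops_per_token = 2 * (top_k * expert_params + router_params)
    return total_params, flops_per_token

for experts in (8, 16):
    print(experts, moe_ffn_cost(d_model=1024, d_ff=4096, num_experts=experts, top_k=1))
```

With `d_model=1024`, `d_ff=4096`, and top-1 routing, going from 8 to 16 experts roughly doubles the layer's parameters (about 67.1M to 134.2M) while per-token FLOPs grow only by the router term (about 16.79M to 16.81M).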

Abstract: Despite their remarkable achievement, gigantic transformers encounter significant drawbacks, including exorbitant computational and memory footprints during training, as …
